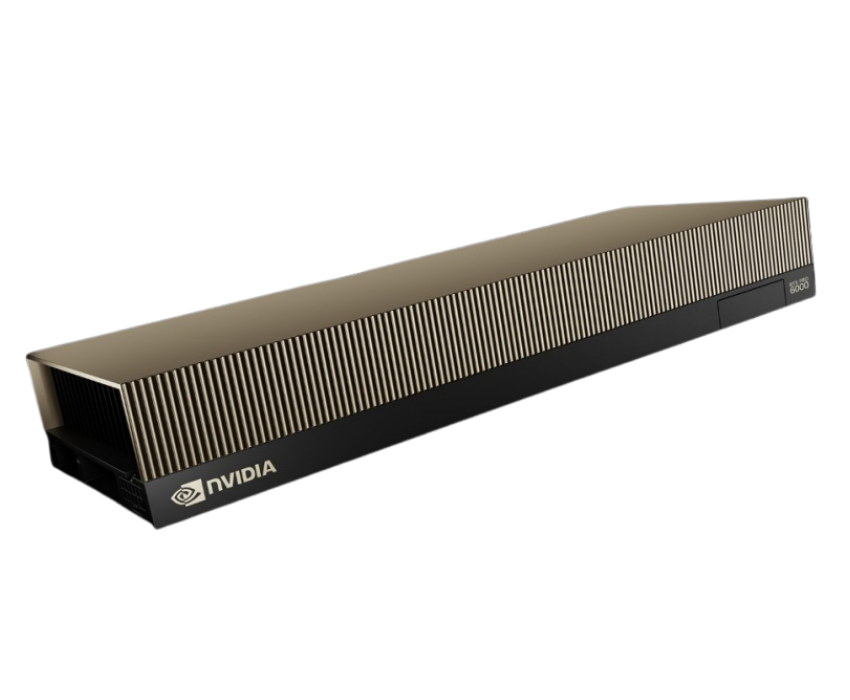

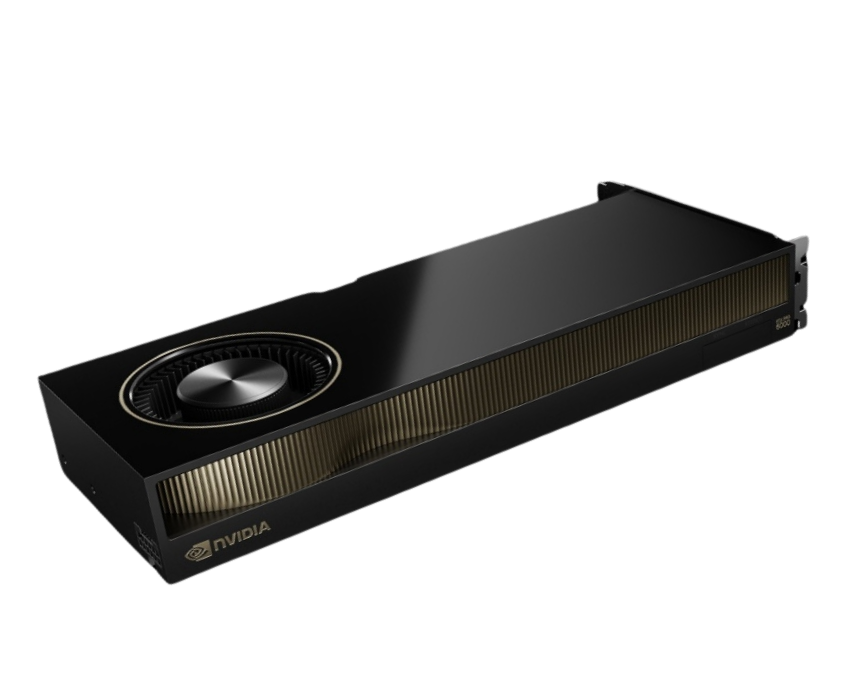

Instant High-End Workstations & Clusters

Spin up RTX PRO 6000 nodes for real-time design, simulation, AI model training or production-rendering in minutes.

The NVIDIA RTX PRO 6000 is engineered for professionals who need extreme performance across AI, engineering simulations, 3D design, and high-end content creation. With powerful compute capabilities and 96 GB of VRAM, it handles ultra-complex models, large datasets, and real-time rendering.

GPU memory: 96 GB GDDR7 with ECC

CUDA cores: 24,064 (approx.)

Tensor cores: 752 (5th generation)

Memory bandwidth: 1,792 GB/s

Enterprise-grade compute, massive memory, and rock-solid infrastructure — without the DevOps drag.

With 96 GB VRAM and ultra-high bandwidth, huge scenes, digital twins, multi-camera footage or large AI datasets stream without bottlenecks.

Dedicated nodes, role-based access, full encryption, compliance (ISO, SOC…), and monitoring built in — letting creative, engineering and data teams work confidently.

When your workflow demands the top tier of GPU memory, computational performance and visualization fidelity, the NVIDIA RTX PRO 6000 is the choice. Enterprises, studios and research teams choose inhosted.ai because it delivers workstation-class graphics, AI compute and massive memory in the cloud, with an enterprise SLA, global reach and transparent billing.

Merge real-time rendering, simulation, and AI inference on one platform. RTX PRO 6000 handles ray tracing, physics simulation, and large model fine-tuning in one cluster.

96 GB GDDR7 ensures even the largest datasets or visual assets fit without memory swaps. Ideal for VFX, architecture, digital twins, and large-scale AI deployment.

Deployments run in certified data centres with isolation, encryption, audit logging, and high availability, which matters most when workloads are mission-critical.

Launch RTX PRO 6000 clusters in multiple regions with autoscaling, monitoring, and transparent usage billing. No guesswork — scale when needed, shrink when done.

Built on the latest NVIDIA Blackwell architecture, the RTX PRO 6000 delivers next-gen Tensor and RT cores, ultra-high memory bandwidth and massive VRAM headroom. With 96 GB of GDDR7 memory, 24,064 CUDA cores, and up to ~1,792 GB/s bandwidth, this GPU is engineered for teams that require studio-quality rendering, real-time simulation, and enterprise-scale AI deployment — all in one cluster.
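To put the 96 GB and ~1,792 GB/s figures in perspective, here is a rough back-of-the-envelope sketch in Python. It is an illustration only: the function names and the example model sizes are hypothetical, the fit check counts model weights alone (ignoring activations, KV cache, optimizer state and framework overhead), and the timing is a theoretical lower bound at peak bandwidth, not a measured result.

```python
# Rough capacity arithmetic using the two figures quoted above.
# Assumptions (not from the source): 1 GB = 1e9 bytes; weights only.

VRAM_GB = 96.0           # GDDR7 capacity quoted above
BANDWIDTH_GB_S = 1792.0  # peak memory bandwidth quoted above


def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in GB for a model of the given size."""
    return params_billions * bytes_per_param


def fits_in_vram(params_billions: float, bytes_per_param: float,
                 vram_gb: float = VRAM_GB) -> bool:
    """True if the weights alone fit in VRAM (no other overhead counted)."""
    return weights_gb(params_billions, bytes_per_param) <= vram_gb


def min_sweep_ms(data_gb: float,
                 bandwidth_gb_s: float = BANDWIDTH_GB_S) -> float:
    """Lower bound, in milliseconds, to read data_gb once at peak bandwidth."""
    return data_gb / bandwidth_gb_s * 1000.0


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to show both outcomes.
    for label, params_b, bpp in [
        ("30B @ 16-bit", 30, 2),
        ("70B @ 16-bit", 70, 2),
        ("70B @ 8-bit", 70, 1),
    ]:
        size = weights_gb(params_b, bpp)
        verdict = "fits" if fits_in_vram(params_b, bpp) else "does not fit"
        print(f"{label}: {size:.0f} GB of weights, {verdict} in {VRAM_GB:.0f} GB")

    print(f"Reading all {VRAM_GB:.0f} GB once takes at least "
          f"{min_sweep_ms(VRAM_GB):.1f} ms at peak bandwidth")
```

Under these assumptions, a single pass over the full 96 GB takes at least about 54 ms, and whether a given model fits depends heavily on the numeric precision used for its weights.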

No middlemen. No shared footprints. End-to-end control of power, cooling, networking and security—so your AI workloads run faster, safer, and more predictably.

The RTX PRO 6000 accelerates both visualization and AI workflows. Whether you’re rendering film-quality frames, running digital twin simulations or fine-tuning large models — this GPU delivers the throughput and headroom to power production at scale.

Faster ray-traced visual rendering vs previous generation pro GPUs

Higher AI inference/fine-tuning throughput for vision, language and multi-modal models

Massive VRAM for complex scenes, multi-camera workflows, large datasets

Uptime on inhosted.ai GPU cloud

From real-time collaborative design to AI-infused visualization and simulation — where the RTX PRO 6000 enables breakthrough execution.

Render film-quality frames, visual effects, and large-format output with hardware RT cores and massive memory headroom.

Engineers and designers iterate live on large models, complex physics and digital twin environments — thanks to memory and compute scale.

Fine-tune models, generate scenes, apply AI denoising and upscaling — all within one GPU cluster built for both graphics and intelligence.

Though not purely an AI-training GPU like some specialized models, the RTX PRO 6000 offers massive memory and compute for model prototyping, simulation, and inference – especially when visuals matter.

Process 4K/8K video batches, apply AI workflows for upscaling and denoising, and deliver high-throughput media pipelines without latency or quality trade-offs.

Handle mega-models, live rendering, VR/AR review sessions and collaborative workflows across teams and regions.

At inhosted.ai, we empower AI-driven businesses with enterprise-grade GPU infrastructure. From GenAI startups to Fortune 500 labs, our customers rely on us for consistent performance, scalability, and round-the-clock reliability. Here's what they say about working with us.

“Switching to inhosted.ai’s RTX PRO 6000 cluster was a game-changer for our studio. Our rendering time halved, and now we can interact with high-res scenes in real-time across teams.”

“The RTX PRO 6000 GPUs from inhosted.ai provide excellent performance. Our CAD and BIM teams now run simulations seamlessly, with real-time ray tracing and NVLink support — our reviews are faster than ever.”

“Our team switched to RTX PRO 6000 for digital signage, and now our real-time AI inference pipelines are much more efficient. The power of RTX at this price point is unmatched.”

“Thanks to inhosted.ai, our content creation team now benefits from real-time rendering with RTX PRO 6000. The performance consistency and the support we’ve received make it feel like a local supercomputer.”

“Deploying RTX PRO 6000 for our rendering needs was the right choice. Scaling was smooth, and the reliability made it easy for us to run high-demand AI tasks without worrying about performance.”

“We’ve been using RTX PRO 6000 for video AI and CAD rendering. The latency is low, and memory bandwidth helps us tackle complex models without a hitch. A great investment.”

The RTX PRO 6000 is NVIDIA’s top-tier professional GPU built on the Blackwell architecture with 96 GB GDDR7 memory, massive compute and visualization capability. It’s meant for professionals who combine high-end graphics, simulation, and AI model workflows — studios, engineering firms, research labs and enterprises running real-time visualization plus compute.

Compared with the A6000, the RTX PRO 6000 offers twice the VRAM (96 GB vs 48 GB), higher memory bandwidth, and next-gen RT and Tensor cores for both AI and visualization. While the A6000 handles many pro tasks, the RTX PRO 6000 is for the largest datasets and most advanced workflows. The L40S leans more toward AI plus graphics at scale but doesn’t reach this memory and compute headroom.

Yes — absolutely. With massive memory and next-gen Tensor cores, it handles AI inference, fine-tuning, and large model workloads very well. Although dedicated AI-training GPUs may still be more cost-efficient for pure HPC/LLM scale, the PRO 6000 shines when you need both visualization and AI in one platform.

Creative media and VFX studios, architectural & engineering firms, simulation & digital twin developers, AI research and prototyping labs — all benefit. The GPU is best where large models, real-time visualization, collaborative workflows and AI analytics intersect.

Very scalable. inhosted.ai supports multi-GPU clusters, regional deployment, autoscaling, and resource tracking. You can deploy a handful of nodes for workstation use or dozens for render farms or AI pipelines — all with transparency.

Deployments run on ISO 27001 and SOC-certified infrastructure, with full encryption, tenant isolation, audit logs, and a 99.95% performance SLA. This suits enterprise-grade workflows where assets, IP and data are critical.
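As a rough guide to what a 99.95% figure means in practice, the Python sketch below converts an availability percentage into a downtime budget. It assumes the SLA is measured as a simple percentage of total time in the period; the actual measurement window, exclusions and credits are not stated here, and the function name is hypothetical.

```python
# Convert an availability percentage into an allowed-downtime budget.
# Assumption (not from the source): the SLA is a plain percentage of
# wall-clock time over the period, with no exclusions.

def allowed_downtime_minutes(sla_percent: float, period_days: float) -> float:
    """Minutes of downtime permitted over period_days at sla_percent."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    monthly = allowed_downtime_minutes(99.95, 30)
    yearly = allowed_downtime_minutes(99.95, 365)
    print(f"30-day budget: {monthly:.1f} minutes")
    print(f"365-day budget: {yearly / 60:.2f} hours")
```

On this simple reading, 99.95% corresponds to roughly 21.6 minutes of downtime in a 30-day month, or about 4.4 hours over a 365-day year.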

Because you get premium GPU hardware without the hassle of managing infrastructure. Rapid deployment, global reach, unified GPU + AI + graphics, transparent billing — your team focuses on production, not infrastructure.