Accelerate AI and Creative Workflows

Transform your data pipeline and creative process with Tensor and RT Cores that handle AI training, real-time rendering, and simulation simultaneously. Perfect for LLM inference and generative AI content.

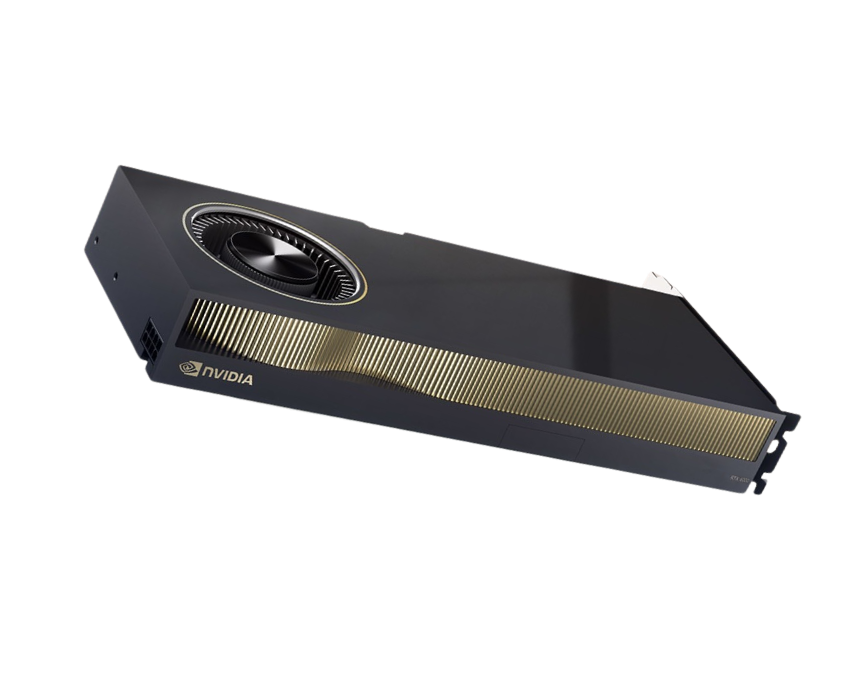

The NVIDIA RTX 6000 Ada brings next-generation GPU performance to AI, 3D rendering, and complex compute workloads, delivering exceptional speed, efficiency, and visual quality. Powered by the Ada Lovelace architecture, it accelerates model training, real-time graphics, digital twins, simulations, and high-end content creation with superior stability.

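GPU memory: 48 GB GDDR6 with ECC

Tensor performance: up to 1,457 TFLOPS (FP8, with sparsity)

Single-precision (FP32) performance: up to 91 TFLOPS

Memory bandwidth: 960 GB/s

Maximum power: 300 W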

Harness unprecedented compute power for AI, graphics, and enterprise-class innovation — without DevOps overhead.

The 48 GB of GDDR6 VRAM keeps complex scenes, multi-camera renders, and large AI datasets resident on the GPU, which reduces stalls from spilling to system memory. ECC protects long-running renders and training jobs against memory errors.

All RTX 6000 Ada nodes on inhosted.ai run in ISO 27001, SOC and PCI DSS-compliant data centers with end-to-end encryption, ensuring secure collaboration for AI and 3D teams.

The NVIDIA RTX 6000 Ada delivers the ultimate balance of AI, graphics, and simulation performance. Enterprises and studios worldwide choose inhosted.ai to leverage this GPU for next-gen digital twin projects, generative AI apps, and photoreal visualization at scale.

Designed for multi-purpose workloads — the RTX 6000 Ada runs AI models, renders scenes, and powers Omniverse pipelines all at once with incredible throughput and efficiency.

Handle massive AI models, simulation datasets, and high-resolution scenes seamlessly. Memory bandwidth of 960 GB/s keeps visual and compute tasks fluid and responsive.
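As a rough illustration of what 48 GB and 960 GB/s mean for LLM work, the sketch below estimates whether a model's weights fit in VRAM and gives a bandwidth-bound ceiling for single-stream token generation. The 20% overhead allowance and the rule of thumb that each generated token reads all weights once are assumptions, not measured figures, and the function names are hypothetical.

```python
# Back-of-the-envelope sizing for one RTX 6000 Ada (figures from the spec list).
VRAM_GB = 48.0          # GPU memory
BANDWIDTH_GB_S = 960.0  # memory bandwidth

# Bytes stored per model parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Size of the model weights in GB (1e9 params * bytes / 1e9)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_in_vram(params_billion: float, precision: str,
                 overhead_fraction: float = 0.2) -> bool:
    """True if weights plus an assumed overhead (KV cache, activations) fit."""
    needed = weight_footprint_gb(params_billion, precision) * (1 + overhead_fraction)
    return needed <= VRAM_GB


def decode_tokens_per_second_ceiling(params_billion: float, precision: str) -> float:
    """Upper bound for one stream, assuming every token reads all weights once."""
    return BANDWIDTH_GB_S / weight_footprint_gb(params_billion, precision)


if __name__ == "__main__":
    for size, precision in ((13, "fp16"), (34, "fp16"), (34, "fp8")):
        print(size, precision,
              "fits" if fits_in_vram(size, precision) else "does not fit",
              f"ceiling ~{decode_tokens_per_second_ceiling(size, precision):.0f} tok/s")
```

Under these assumptions a 13B-parameter model fits at FP16, while a 34B-parameter model needs FP8 to fit on a single card. Real throughput depends on batch size, context length, and the inference runtime.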

With 4th-Gen Tensor Cores and 3rd-Gen RT Cores, the RTX 6000 Ada delivers unprecedented AI inference and visual compute performance for mission-critical operations.

Deploy and scale your RTX 6000 Ada clusters across multiple geographies with 99.95% uptime and latency-optimized edge regions, ideal for collaborative and distributed teams.
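To put the 99.95% figure in concrete terms, the short sketch below converts an uptime percentage into an allowed downtime budget. It assumes the percentage applies uniformly over the period; how inhosted.ai actually measures uptime is not stated here, and the function name is hypothetical.

```python
MINUTES_PER_DAY = 24 * 60


def downtime_budget_minutes(uptime_percent: float, period_days: int) -> float:
    """Minutes of downtime allowed in a period at the given uptime percentage."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    unavailable_fraction = (100.0 - uptime_percent) / 100.0
    return unavailable_fraction * period_days * MINUTES_PER_DAY


if __name__ == "__main__":
    for label, days in (("30-day month", 30), ("365-day year", 365)):
        print(f"{label}: {downtime_budget_minutes(99.95, days):.1f} min")
```

At 99.95%, that works out to about 21.6 minutes per 30-day month, or about 262.8 minutes (roughly 4.4 hours) per year.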

Powered by the Ada Lovelace architecture, the NVIDIA RTX 6000 Ada delivers next-generation Tensor and RT core performance for AI inference, simulation, and real-time graphics. With 48 GB of ECC memory, multi-GPU scaling over PCIe Gen 4 (the card has no NVLink connector), and hardware AV1 encoding, it’s engineered for AI-enhanced visualization, product design, and digital twin environments that demand both accuracy and speed.

No middlemen. No shared footprints. End-to-end control of power, cooling, networking and security—so your AI workloads run faster, safer, and more predictably.

The RTX 6000 Ada outpaces previous generations with massive throughput gains in AI inference, ray tracing, and rendering — making it the go-to GPU for enterprises blending AI and creative visualization.

Faster ray tracing vs. previous RTX A6000 generation

Higher AI training throughput for vision and language models

48 GB VRAM for high-fidelity rendering and simulation scenes

99.95% cloud uptime on inhosted.ai GPU infrastructure

Where the RTX 6000 Ada bridges AI, graphics, and simulation to drive true enterprise innovation.

Run AI assistants, RAG systems, and diffusion models at scale with Tensor Core acceleration and FP8 precision. Ideal for AI studios and enterprises building visual and text AI tools.

Power architectural renders, BIM models, and simulation workflows with AI acceleration and ray-traced realism for true real-time feedback loops.

Real-time ray tracing and hardware denoising deliver cinema-grade renders in a fraction of the time. Perfect for film, architecture, and product visualization studios.

Accelerate simulation and modeling for manufacturing and smart-infrastructure projects with GPU-accelerated physics and multi-GPU scaling over PCIe.

Collaborate on virtual worlds and product design in real time. RTX 6000 Ada supports Omniverse platform tools for shared workspaces and interactive review.

Leverage the GPU for AI image/video analysis, object detection, and media generation. FP8 Tensor throughput enables low-latency processing for vision-based AI.

At inhosted.ai, we empower AI-driven businesses with enterprise-grade GPU infrastructure. From GenAI startups to Fortune 500 labs, our customers rely on us for consistent performance, scalability, and round-the-clock reliability. Here's what they say about working with us.

“Switching to inhosted.ai’s RTX 6000 Ada nodes cut our render times by over 70%. Complex scenes that used to stall are now interactive — a massive upgrade for our architectural visualization team.”

“The combination of AI and visualization on RTX 6000 Ada is perfect for our automotive design workflows. Real-time rendering, faster simulation, and smooth collaboration across teams — exactly what we needed for production-level innovation.”

“The RTX 6000 Ada has truly transformed our rendering and AI workloads. The scalability and performance are unmatched, enabling us to meet our client’s needs faster and with higher quality.”

“inhosted.ai’s RTX 6000 Ada nodes deliver excellent throughput for our enterprise AI inference systems. The billing is transparent, uptime solid, and scaling from 4 GPUs to 32 was completely effortless. We can finally run concurrent inference jobs without worrying about performance drops.”

“Deploying RTX 6000 Ada GPUs through inhosted.ai helped us merge content creation and AI workflows under one roof. The flexible deployment options and consistent latency made our entire media production pipeline more predictable and efficient.”

“The RTX 6000 Ada cluster gives us everything — speed, efficiency, and visual quality. Our AI-powered product rendering now completes 4× faster, and their 24×7 support ensures uptime stays rock solid even during large-scale inference runs.”

The RTX 6000 Ada is a professional-grade GPU engineered for AI acceleration, 3D rendering, simulation, and visual computing. It’s widely used in digital twin projects, LLM inference, film VFX, architecture, and product design.

The RTX 6000 Ada is built on the newer Ada Lovelace architecture, with faster Tensor and RT cores, higher memory bandwidth, and up to roughly double the AI throughput of the RTX A6000. It pairs the kind of AI and graphics capability found in the L40S with workstation-grade visual fidelity.

Yes. With FP8 and FP16 Tensor performance, it’s excellent for AI training and inference workloads — especially for vision, NLP, and media generation models.

Creative studios, engineering firms, AI labs, and enterprises developing digital twins, simulation, and visual AI applications see the greatest benefit from the Ada architecture’s power and scalability.

Absolutely. Using inhosted.ai’s multi-GPU orchestration, you can scale from a single GPU to multi-node clusters for parallel rendering and AI inference without downtime.
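One simple way to picture parallel rendering across several GPUs is to split a frame range into contiguous chunks, one per GPU. The sketch below is illustrative only: `split_frames` is a hypothetical helper, not part of any inhosted.ai API, and it assumes every frame costs roughly the same to render.

```python
from typing import List, Tuple


def split_frames(first_frame: int, last_frame: int,
                 gpu_count: int) -> List[Tuple[int, int, int]]:
    """Return (gpu_index, start_frame, end_frame) chunks covering the range.

    Frames are divided as evenly as possible; the first GPUs take one extra
    frame when the total does not divide evenly.
    """
    total = last_frame - first_frame + 1
    if gpu_count < 1 or total < 1:
        raise ValueError("need at least one GPU and one frame")

    base, extra = divmod(total, gpu_count)
    chunks = []
    start = first_frame
    for gpu_index in range(gpu_count):
        size = base + (1 if gpu_index < extra else 0)
        if size == 0:
            continue  # more GPUs than frames: leave this GPU idle
        chunks.append((gpu_index, start, start + size - 1))
        start += size
    return chunks


if __name__ == "__main__":
    for gpu_index, start, end in split_frames(1, 240, 4):
        print(f"GPU {gpu_index}: frames {start}-{end}")
```

Growing the cluster then only changes `gpu_count`; a production scheduler would also account for uneven frame cost and node failures.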

All Ada GPUs run on dedicated, isolated cloud nodes with end-to-end encryption, enterprise firewall policies, and 99.95% uptime, plus real-time GPU monitoring for visibility and control.

Because inhosted.ai combines hardware acceleration with software ease — pre-configured images, auto-scaling, and transparent billing ensure your AI and creative workflows run faster, smarter, and safer.