Launch Training Pipelines Fast

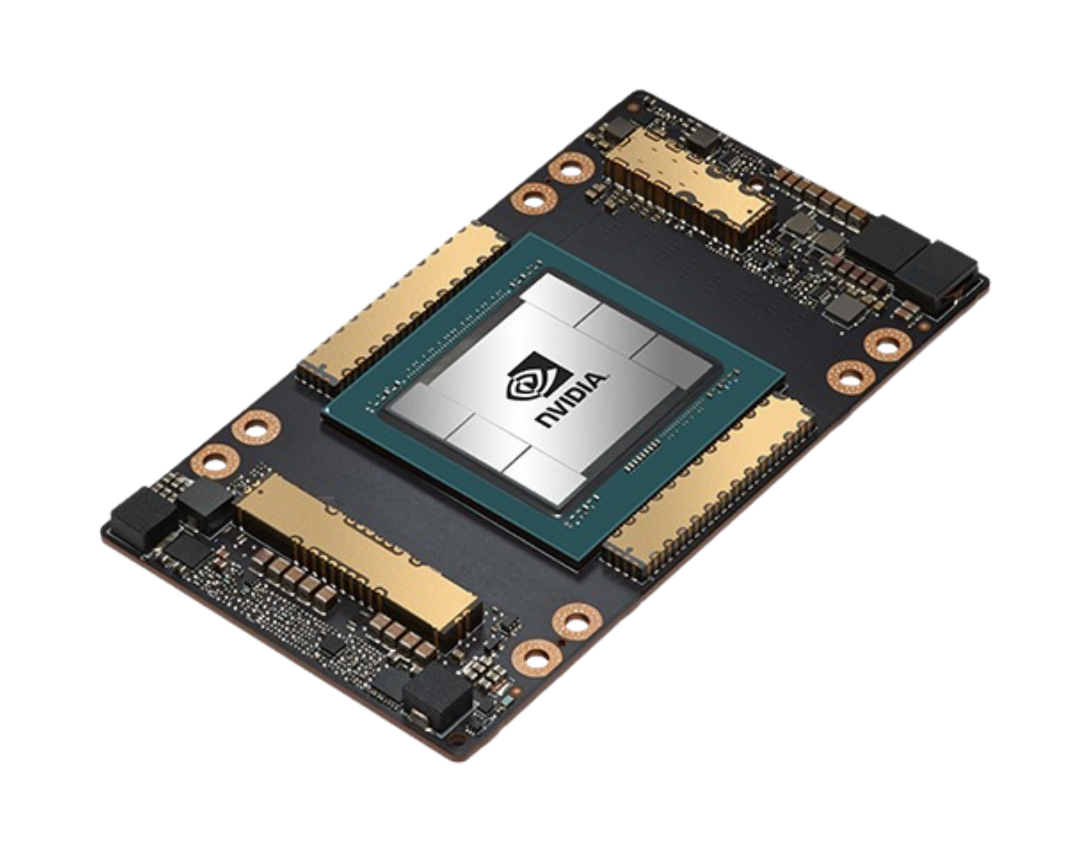

Spin up A100 clusters for LLM, recommender, and multi-modal workloads, with auto scaling, spot-aware scheduling, and job templates for replicating experiments.