Instant AI Scaling

Run inference, vision, and video workloads simultaneously with dynamic scaling.

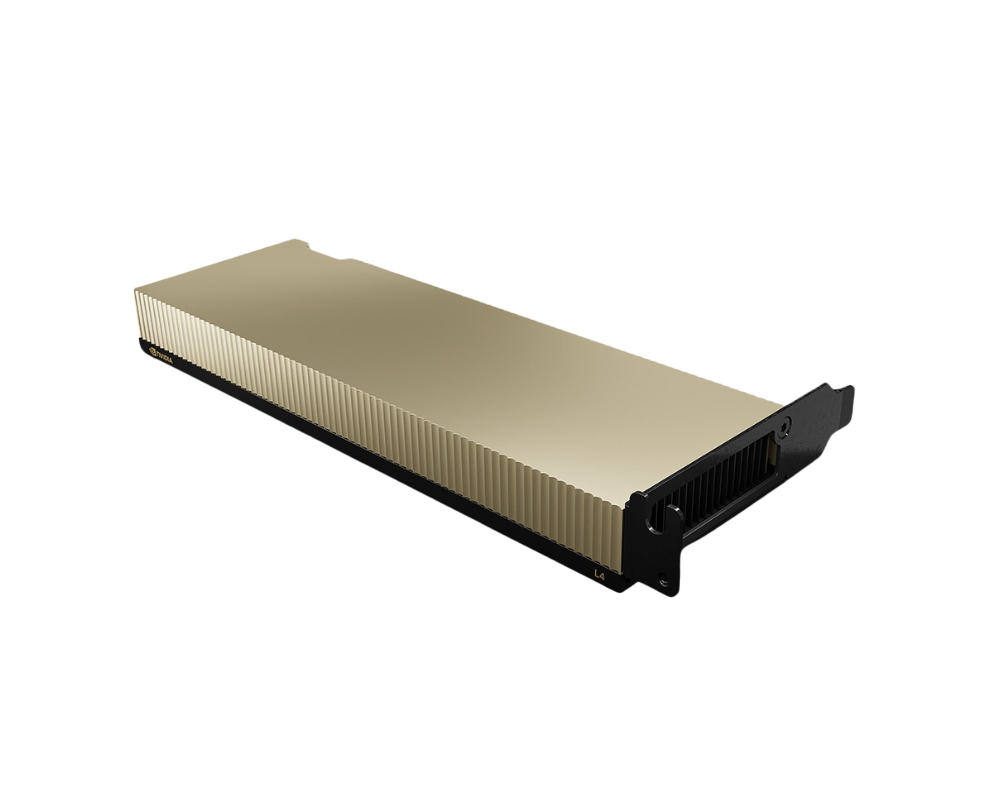

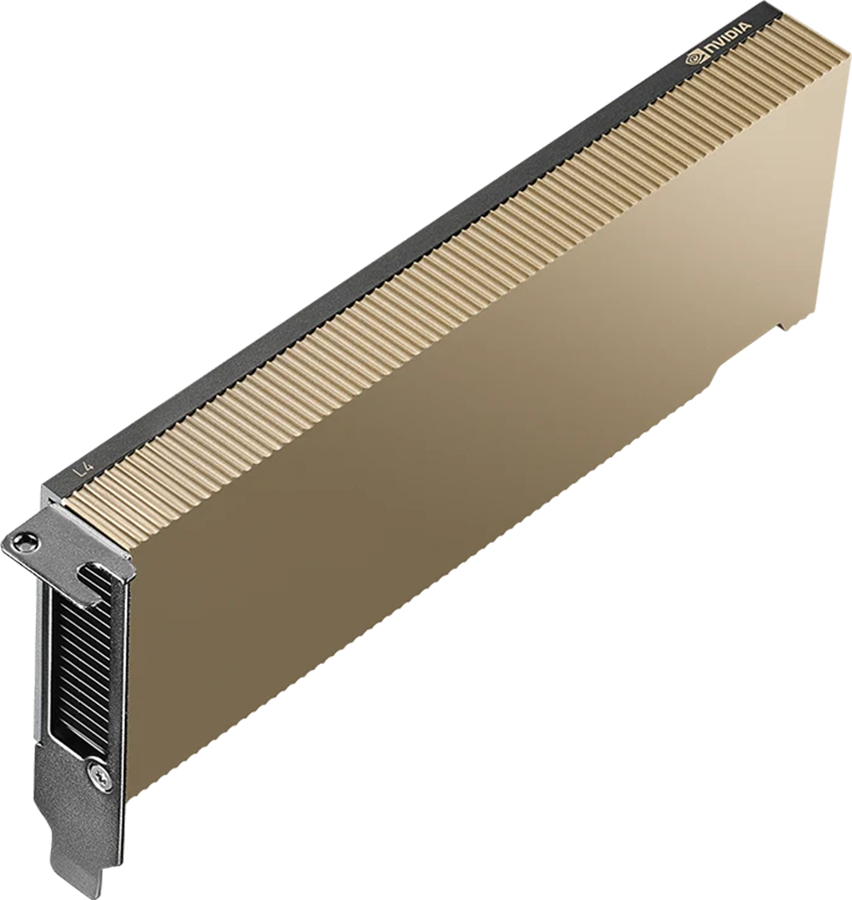

The NVIDIA L4 is a powerful, energy-efficient GPU designed to accelerate modern AI workloads across cloud, enterprise, and edge environments. Ideal for AI inference, image and video processing, content generation, and real-time analytics, the L4 delivers exceptional performance while maintaining a low power profile—making it a smart choice for businesses scaling AI without high infrastructure costs.

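Memory: 24 GB GDDR6

FP8 Tensor Core performance: up to 485 TFLOPS (with sparsity)

FP32 performance: up to 30 TFLOPS

Memory bandwidth: 300 GB/s

Max power (TDP): 72 W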
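To make the memory and bandwidth figures concrete, here is a back-of-envelope sizing sketch. It assumes that only model weights occupy GPU memory (ignoring KV cache, activations, and runtime overhead) and that single-stream LLM decoding is memory-bandwidth bound, so each generated token reads every weight once. The function names are hypothetical, and the results are rough upper bounds, not measured L4 throughput.

```python
# Back-of-envelope sizing for one GPU with 24 GB memory and 300 GB/s bandwidth.
# Assumptions (illustrative only): weights are the only memory consumer, and
# single-stream decoding is bandwidth bound (every token reads all weights once).

GPU_MEMORY_GB = 24.0           # L4 memory capacity
MEMORY_BANDWIDTH_GB_S = 300.0  # L4 memory bandwidth

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_footprint_gb(params_billion: float, dtype: str) -> float:
    """Weight size in GB (1 GB = 1e9 bytes): 1e9 params x bytes per param."""
    return params_billion * BYTES_PER_PARAM[dtype]


def fits_in_memory(params_billion: float, dtype: str) -> bool:
    """True if the weights alone fit in GPU memory (no KV cache or overhead)."""
    return weight_footprint_gb(params_billion, dtype) <= GPU_MEMORY_GB


def max_decode_tokens_per_s(params_billion: float, dtype: str) -> float:
    """Bandwidth-bound ceiling: each token streams all weights from memory once."""
    return MEMORY_BANDWIDTH_GB_S / weight_footprint_gb(params_billion, dtype)


if __name__ == "__main__":
    for size, dtype in [(7, "fp16"), (13, "fp16"), (13, "fp8")]:
        print(f"{size}B {dtype}: {weight_footprint_gb(size, dtype):.1f} GB, "
              f"fits={fits_in_memory(size, dtype)}, "
              f"<= {max_decode_tokens_per_s(size, dtype):.1f} tokens/s")
```

Under these assumptions, a 7B-parameter model in FP16 needs about 14 GB for weights and fits, with a per-stream ceiling near 21 tokens/s; a 13B model needs 26 GB in FP16 and only fits after quantizing to FP8 (about 13 GB). Batching several requests amortizes the weight reads, so aggregate throughput can exceed this per-stream bound.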

Performance, agility and predictable scale — without the DevOps drag.

Run inference, vision, and video workloads simultaneously with dynamic scaling.

Accelerate 4K/8K encoding, real-time video analytics, and live streaming pipelines.

Lower your carbon footprint with the optimized power efficiency of the Ada Lovelace architecture.

From real-time inference to video analytics, enterprises trust Inhosted.ai to deliver the performance of NVIDIA L4 GPUs, optimized for scalability, security, and seamless deployment.

The L4 GPU offers affordable AI performance, perfect for inference, analytics, and automation workloads.

Encode, decode, and transcode multiple video streams with NVIDIA NVENC & NVDEC acceleration.

Compact form factor suitable for both edge AI and large-scale cloud deployments.

Hosted in NetForChoice Tier 3 data centers with predictable pricing and 99.95% uptime.
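For a sense of what the 99.95% uptime figure means in practice, the sketch below converts an availability percentage into permitted downtime. It assumes a 30-day month and a 365-day year; how uptime is actually measured and what is excluded are defined by the provider's SLA, which this sketch does not model. The function name is hypothetical.

```python
# Convert an availability percentage into the downtime it permits.
# Assumption: 30-day month and 365-day year; real SLA terms may differ.

MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(availability_pct: float, days: float) -> float:
    """Minutes of downtime permitted over `days` at the given availability."""
    return (1.0 - availability_pct / 100.0) * days * MINUTES_PER_DAY


if __name__ == "__main__":
    monthly = allowed_downtime_minutes(99.95, 30)
    yearly = allowed_downtime_minutes(99.95, 365)
    print(f"99.95% over 30 days:  {monthly:.1f} minutes")
    print(f"99.95% over 365 days: {yearly / 60:.2f} hours")
```

At 99.95%, this works out to about 21.6 minutes per 30-day month, or roughly 4.4 hours per year.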

As AI and video become more pervasive, the demand for efficient, cost-effective computing is greater than ever. NVIDIA L4 Tensor Core GPUs deliver up to 120x better AI video performance than traditional CPU-based infrastructure, resulting in up to 99 percent better energy efficiency and lower total cost of ownership.

No middlemen. No shared footprints. End-to-end control of power, cooling, networking and security—so your AI workloads run faster, safer, and more predictably.

The NVIDIA L4 sets new benchmarks for efficient deep learning inference, video processing, and graphics workloads. Experience next-level scalability, power efficiency, and intelligent throughput with fourth-generation Tensor Cores and FP8 precision.

Faster AI inference than previous generation T4

Higher media encoding efficiency

Typical power draw for sustainable performance

Always-on availability through inhosted.ai infrastructure

Where the NVIDIA L4 transforms workloads into breakthroughs, from real-time AI inference to video analytics and edge computing, accelerating results that redefine performance limits.

Run intelligent workloads such as chatbots, object detection, anomaly recognition, and recommendation systems with ultra-low latency. The L4 GPU's Tensor Cores deliver lightning-fast inference for computer vision and NLP models while maintaining cost-efficient scalability for production AI environments.

Process, analyze, and enhance high-resolution video streams in real time. With NVENC/NVDEC acceleration, the L4 enables smooth multi-stream encoding, decoding, and AI-based video enhancement — ideal for surveillance, smart-city cameras, and OTT content delivery networks.
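A multi-stream NVDEC/NVENC pipeline like this can be driven from FFmpeg. The Python sketch below assumes an FFmpeg build compiled with NVIDIA hardware acceleration (the CUDA hwaccel and the `h264_nvenc`/`hevc_nvenc` encoders) plus an installed NVIDIA driver; the helper names, file names, and the 5 Mbit/s bitrate are illustrative assumptions, not inhosted.ai defaults.

```python
import subprocess


def nvenc_transcode_cmd(src: str, dst: str, codec: str = "h264_nvenc",
                        bitrate: str = "5M") -> list[str]:
    """Build an FFmpeg argv that decodes on NVDEC and encodes on NVENC.

    `-hwaccel cuda` decodes on the GPU, and `-hwaccel_output_format cuda`
    keeps decoded frames in GPU memory so they reach the NVENC encoder
    without a copy through system RAM.
    """
    return [
        "ffmpeg", "-y",
        "-hwaccel", "cuda",
        "-hwaccel_output_format", "cuda",
        "-i", src,
        "-c:v", codec,    # e.g. "h264_nvenc" or "hevc_nvenc"
        "-b:v", bitrate,  # target video bitrate (assumed value)
        "-c:a", "copy",   # pass audio through unchanged
        dst,
    ]


def transcode_streams(jobs: list[tuple[str, str]]) -> list[int]:
    """Start one FFmpeg process per (src, dst) pair, then wait for all of them.

    Requires `ffmpeg` with NVIDIA support on PATH; returns the exit codes.
    """
    procs = [subprocess.Popen(nvenc_transcode_cmd(src, dst)) for src, dst in jobs]
    return [p.wait() for p in procs]
```

For example, `transcode_streams([("cam1.mp4", "cam1_out.mp4"), ("cam2.mp4", "cam2_out.mp4")])` would start two GPU transcodes in parallel. Running one process per stream keeps each camera or channel independent; how many streams run concurrently in practice depends on resolution, codec, and available encoder capacity.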

Deploy lightweight AI models directly at the edge — closer to your users, sensors, and devices. L4 GPUs are optimized for low-power, high-throughput inference, perfect for retail analytics, IoT automation, predictive maintenance, and industrial monitoring in remote or distributed environments.

Accelerate real-time 3D visualization, industrial design, and virtual prototyping using GPU-powered rendering. The L4 GPU enables rapid simulation feedback loops for manufacturing, construction, and smart-infrastructure modeling, cutting design cycles and costs.

Automate media review, image classification, and sensitive-content detection using GPU-accelerated inference pipelines. Organizations in social media, broadcasting, and e-commerce rely on L4 instances to maintain compliance and enhance platform safety at scale.

At inhosted.ai, we empower businesses with cutting-edge GPU infrastructure that powers everything from AI research to real-time applications. Here’s what our customers say about their experience.

“Switching to A30 cut our AI training time by 60% and lowered cost by 30%.”

“We use L4 instances for AI inference at the edge. Performance is incredible for the price.”

“L4 GPUs gave us enterprise-grade video analytics on a startup budget.”

“Inhosted.ai’s L4 cluster deployment took under a minute — amazing support and reliability.”

“Perfect GPU for our digital signage and real-time AI inference workloads.”

“Low power, low cost, high performance — exactly what we needed for distributed AI applications.”

AI inference, media processing, and edge AI applications needing high efficiency and low power.

It delivers a significant boost in AI performance and media acceleration, built on the NVIDIA Ada Lovelace architecture with 24 GB of GDDR6 memory.

Yes, L4 GPUs are optimized for multi-stream encoding and AI-based video enhancement.

The L4 does not support NVLink or NVSwitch; multiple L4 GPUs communicate over PCIe Gen4 x16 (64 GB/s), which suits scale-out inference. For massive distributed AI training and HPC clusters that need NVLink, the H100 offers up to 900 GB/s of GPU-to-GPU interconnect bandwidth.

Extremely efficient: it draws at most 72 W while delivering roughly double the performance of the previous generation.

Because we combine Tier 3 data center reliability with transparent pricing and quick deployment in multiple regions.