Deploy in 10s

One-Click Clusters, Auto-Scaling, and Temporary Jobs

No queues, no tickets: just run. Get on-demand access to H100 GPU compute optimized for production workloads as well as rapid experimentation.

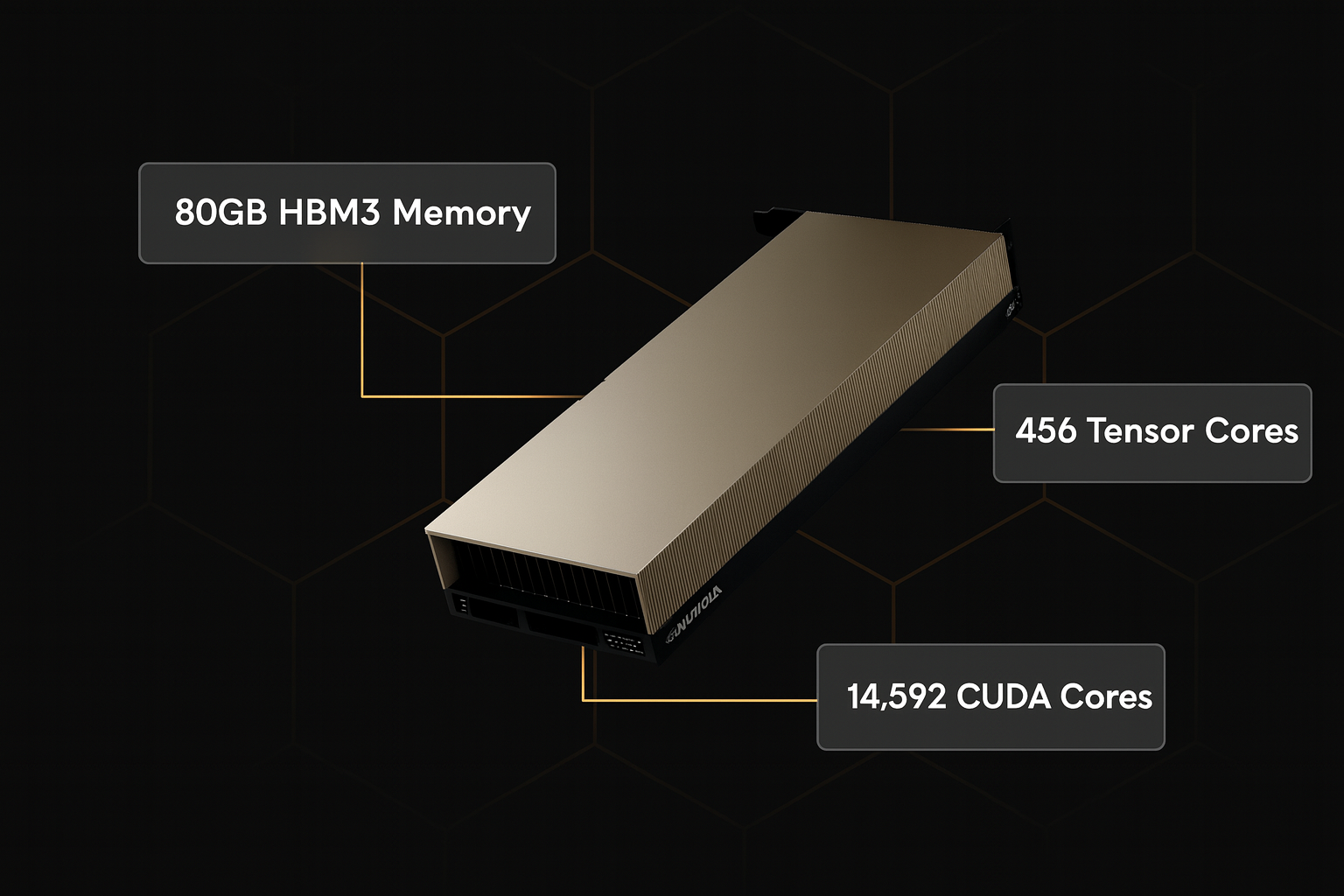

The NVIDIA H100 is redefining what is possible in modern computing. This next-generation GPU is designed for advanced AI workloads, scalable model training, and enterprise-grade performance, delivering exceptional speed, scalability, and efficiency. Organizations that cannot afford to slow down use the H100 to get the compute power they need to innovate faster in AI, analytics, and other data-intensive applications.

80 GB HBM3 memory

3,958 TFLOPS FP8 Tensor Core (with sparsity)

67 TFLOPS FP64 Tensor Core

3.35 TB/s memory bandwidth

900 GB/s bidirectional NVLink
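The 80 GB HBM3 figure sets a hard ceiling on model size per GPU. The rough check below estimates whether a model's weights fit on a single H100. It assumes 1 GB = 10^9 bytes and counts weights only, with no KV cache, activations, optimizer state, or framework overhead, so real headroom is smaller. The function names are hypothetical.

```python
# Rough check: do a model's weights fit in one H100's 80 GB of HBM3?
# Assumptions (illustrative only): 1 GB = 1e9 bytes, weights only,
# no KV cache, activations, optimizer state, or framework overhead.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}
H100_MEMORY_GB = 80


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed to hold the raw weights, in GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def max_params_that_fit(dtype: str, memory_gb: float = H100_MEMORY_GB) -> float:
    """Upper bound on the parameter count whose weights alone fit in memory_gb."""
    return memory_gb * 1e9 / BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    for dtype in ("fp16", "fp8"):
        print(f"{dtype}: up to {max_params_that_fit(dtype) / 1e9:.0f}B params (weights only)")
    # A hypothetical 13B-parameter model stored in BF16:
    need = weight_memory_gb(13e9, "bf16")
    print(f"13B @ bf16 needs {need:.0f} GB of weights; fits: {need <= H100_MEMORY_GB}")
```

Under these assumptions, one GPU holds about 40B parameters of FP16 weights or 80B of FP8 weights. Training needs considerably more memory per parameter, because gradients and optimizer state must also be stored. This is where multi-GPU NVLink configurations come in.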

Agility, predictable scale, and performance, with no DevOps drag. This platform is powered by the NVIDIA H100 to deliver next-generation performance to enterprises that want top-tier GPU infrastructure for AI, ML, and other data-intensive workloads.

Distributed I/O built for LLM training and high-QPS inference, with no hot spots. It is designed to work with NVIDIA GPUs for deep learning and keeps data flowing smoothly through massive AI pipelines.

Backed by ISO 20000, ISO 27017, ISO 27018, SOC, and PCI DSS compliance and a 99.95% uptime SLA, the platform gives organizations a dependable foundation for deploying NVIDIA H100 GPU infrastructure at scale.
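The next sketch makes the 99.95% uptime figure concrete by converting an uptime percentage into a downtime budget. It assumes a 30-day month and a 365-day year. Actual SLAs define their own measurement windows and exclusions, so the contract terms take precedence over this arithmetic.

```python
# Convert an uptime SLA percentage into an allowed-downtime budget.
# Assumption: 30-day month and 365-day year; real SLAs define their own
# measurement windows and exclusions.

MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(sla_percent: float, days: int) -> float:
    """Maximum downtime, in minutes, over `days` days at `sla_percent` uptime."""
    return (1 - sla_percent / 100) * days * MINUTES_PER_DAY


if __name__ == "__main__":
    month = allowed_downtime_minutes(99.95, 30)   # assumed 30-day month
    year = allowed_downtime_minutes(99.95, 365)   # assumed 365-day year
    print(f"99.95% uptime: ~{month:.1f} min/month, ~{year / 60:.1f} h/year")
```

At 99.95%, the budget is about 21.6 minutes per 30-day month, or roughly 4.4 hours per year.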

From model training to model inference, enterprises rely on inhosted.ai for the raw performance of NVIDIA H100 GPUs, tuned for scale, security, and easy deployment across today's AI workloads.

With FP8 Tensor Cores and the NVLink fabric, the H100 can train most large-scale machine learning models up to 3 times faster. Start on a single H100, one of the best GPUs on the market, then scale horizontally without added latency or bottlenecks.

Optimize and refine generative AI models, diffusion frameworks, or RAG pipelines. Get scalable workload balancing driven by the NVIDIA H100 GPU, which is designed to support large-scale inference and training.

Every GPU node operates in an ISO 27001- and SOC-compliant environment, with data isolation, encryption, and compliance frameworks for companies transitioning to NVIDIA server GPUs.

Distribute your H100 clusters across multiple low-latency regions with auto-scaling. Around-the-clock reliability, a 99.95% uptime SLA, and traffic routing keep performance high across the globe.
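The next sketch illustrates how utilization-driven auto-scaling can work in general. It is not inhosted.ai's actual policy. It implements a generic target-tracking rule, the same proportional idea the Kubernetes Horizontal Pod Autoscaler documents:

1. Multiply the node count by observed GPU utilization divided by a target.
2. Round up.
3. Clamp the result to configured limits.

The function name, the integer-percent inputs, and the default limits of 1 to 64 nodes are assumptions.

```python
def desired_gpu_nodes(current_nodes: int, current_util_pct: int, target_util_pct: int,
                      min_nodes: int = 1, max_nodes: int = 64) -> int:
    """Hypothetical target-tracking rule for a GPU cluster.

    desired = ceil(current_nodes * current_util / target_util), clamped to
    [min_nodes, max_nodes]. Utilizations are integer percentages so the
    ceiling division is exact (no floating-point rounding surprises).
    """
    if current_nodes < 1 or target_util_pct <= 0:
        raise ValueError("need at least one node and a positive target utilization")
    # Integer ceiling division: ceil(a / b) == (a + b - 1) // b for a >= 0, b > 0.
    desired = (current_nodes * current_util_pct + target_util_pct - 1) // target_util_pct
    return max(min_nodes, min(max_nodes, desired))


if __name__ == "__main__":
    print(desired_gpu_nodes(8, 90, 60))    # -> 12 (busy cluster scales out)
    print(desired_gpu_nodes(8, 30, 60))    # -> 4 (idle capacity scales in)
    print(desired_gpu_nodes(64, 100, 50))  # -> 64 (capped at max_nodes)
```

Production autoscalers usually add a tolerance band and cooldown periods on top of this rule, so that small utilization swings do not cause constant scaling.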

Power AI workloads with the latest NVIDIA H100 GPU server systems, featuring FP8/FP16 acceleration, ultra-high-speed HBM3 memory, and NVLink connectivity for multi-GPU configurations. Smart power and cooling reduce energy consumption and maximize throughput, an important factor when enterprises weigh H100 GPU price against performance.

No middlemen. No shared footprints. End-to-end control of power, cooling, networking, and security, which is essential for organizations using NVIDIA GPUs for deep learning and enterprise AI.

The NVIDIA H100 sets new standards for deep learning, with faster training and inference on today's most demanding AI and HPC workloads. Its Transformer Engine delivers next-level scalability, power efficiency, and throughput, making it one of the best NVIDIA GPUs for enterprise AI.

Faster Large Language Model Training

Higher GenAI Inference Throughput

Faster Data Processing and Recommendations

Better Energy Efficiency per GPU Node

Where the NVIDIA H100 turns workloads into breakthroughs: LLM training, scientific computing, and faster answers that push performance boundaries.

H100 servers significantly shorten deep learning training time on large datasets and complex architectures. With one of the best GPUs for deep learning, teams reach convergence faster.

Process high-volume event streams with low latency. H100 throughput powers real-time dashboards, anomaly detection, and operational intelligence.

The H100 is a top-choice NVIDIA server GPU at research institutes, because its massive parallelism delivers enormous compute density.

Power LLM training, fine-tuning, and large-scale inference. The H100 architecture improves throughput and supports higher accuracy in translation, classification, RAG pipelines, and conversational AI.

The H100 accelerates computer vision and image workloads, from detection to diffusion. Teams can generate high-quality content with one of the best GPUs for AI workloads and perform consistently at scale.

Train and serve large recommender systems faster. The H100 accelerates embedding and ranking pipelines, improving CTR, retention, and personalization with real-time intelligence.

At inhosted.ai, we empower AI-driven businesses with enterprise-grade GPU infrastructure. From GenAI startups to Fortune 500 labs, our customers rely on us for consistent performance, scalability, and round-the-clock reliability. Here's what they say about working with us.

“Our transition to inhosted.ai’s H100 GPU servers was smoother than expected. Model training that took 18 hours on A100 now completes in under 6. The support team helped fine-tune the cluster setup — truly enterprise-grade service.”

“We train high-parameter LLMs for enterprise search. The H100 nodes on inhosted.ai deliver consistent throughput and excellent latency. Uptime has been flawless, and scaling from 8 to 64 GPUs was completely seamless.”

“The billing transparency is a breath of fresh air. We always know what we’re paying for. Performance on H100 has been top-tier, and the infrastructure stability gives us peace of mind during production inference.”

“We use H100 GPUs for video generation and diffusion workloads. The low-latency file system and predictable scaling made a huge difference. inhosted.ai feels like it’s built for developers, not just data centers.”

“The combination of H100 performance and inhosted.ai’s monitoring dashboard helped us track GPU utilization in real-time. It’s reliable, flexible, and ideal for both research and production-grade AI.”

“We migrated our deep learning pipelines to inhosted.ai’s H100 clusters, and the difference was night and day. Training times dropped, costs stayed stable, and the support team was extremely proactive throughout deployment.”

The NVIDIA H100 is a high-performance GPU accelerator for AI, deep learning, and other large-scale computing workloads. It gives modern AI applications superior speed, efficiency, and scalability.

NVIDIA H100 pricing depends on configuration, region, and deployment model. Cloud access lets businesses use H100 performance without an upfront hardware investment, making it a viable and cost-efficient option.

NVIDIA H100 cluster pricing depends on the number of GPUs, the networking setup, and performance requirements. Clusters used for AI training or other large-scale workloads typically include multiple GPUs linked by high-speed interconnects.
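Cluster cost scales with GPU count and runtime, so a back-of-the-envelope estimate is simple arithmetic. The sketch below assumes a per-GPU-hour rate plus an optional flat hourly networking charge. Both rates are placeholders, not inhosted.ai prices. Real quotes may price storage, interconnect, and commitments differently. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClusterQuote:
    """Inputs for a rough on-demand estimate. All rates are placeholders."""
    gpus: int
    hours: float
    gpu_hourly_rate: float            # assumed price per GPU-hour
    network_hourly_rate: float = 0.0  # assumed flat networking charge per cluster-hour


def estimate_cost(q: ClusterQuote) -> float:
    """cost = gpus * hours * gpu_hourly_rate + hours * network_hourly_rate"""
    if q.gpus < 1 or q.hours < 0:
        raise ValueError("gpus must be >= 1 and hours must be >= 0")
    gpu_hours = q.gpus * q.hours
    return gpu_hours * q.gpu_hourly_rate + q.hours * q.network_hourly_rate


if __name__ == "__main__":
    # Hypothetical 8-GPU cluster for 10 hours at placeholder rates.
    quote = ClusterQuote(gpus=8, hours=10, gpu_hourly_rate=2.0, network_hourly_rate=5.0)
    print(f"GPU-hours: {quote.gpus * quote.hours}, estimated cost: {estimate_cost(quote):.2f}")
```

Comparing GPU-hours rather than wall-clock hours shows why faster hardware can pay off. A job that finishes in a third of the time on the same number of GPUs consumes a third of the GPU-hours.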

The NVIDIA H100 is expensive because it delivers industry-leading performance in AI and machine learning. Its high-bandwidth memory, optimized Tensor Cores, and advanced architecture make it ideal for massive, mission-critical workloads.

A GPU cloud offers access to high-performance GPUs on a pay-as-you-go basis through cloud servers. It lets users run AI models, deep learning, and other compute-intensive programs remotely, without owning the physical hardware.

A cloud server is a virtual server that runs on a cloud platform and offers scalable CPU, GPU, memory, and storage capacity. It lets companies scale infrastructure quickly.

NVIDIA GPUs deliver high performance, shorter training times, and broad AI framework support. Their reliability and scalability make them popular for deep learning, data analytics, and high-performance computing.