Quick Setup, Faster Results

Launch RTX 8000 environments in minutes for design, rendering, and AI applications. No lengthy setup; just get to work.

The RTX 8000 is built for professionals who need serious performance in AI, 3D design, simulation, and visualization. Its high compute power, large memory, and consistent performance make it well suited to teams working on challenging, demanding projects. With NVIDIA RTX, artists and businesses can work faster, more smoothly, and with confidence.

Memory: 48 GB GDDR6 with ECC

Tensor performance: up to 130 TFLOPS

FP32 (single-precision) performance: ~16.3 TFLOPS

Memory bandwidth: 672 GB/s

NVLink: up to 100 GB/s bidirectional (2-way NVLink)
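To make the spec figures more concrete, here is a minimal back-of-envelope sketch using only the numbers listed above (48 GB, 672 GB/s, 16.3 TFLOPS FP32). It assumes peak theoretical rates, which real kernels rarely sustain, and the function names are hypothetical. It computes how long one full pass over VRAM takes at peak bandwidth, and a simple roofline-style "ridge point": the arithmetic intensity (FLOPs per byte moved) a workload needs before compute, rather than memory bandwidth, becomes the limit.

```python
# Back-of-envelope estimates from the RTX 8000 spec figures (peak values only).
MEMORY_GB = 48            # on-board GDDR6 capacity
BANDWIDTH_GB_S = 672      # peak memory bandwidth
FP32_TFLOPS = 16.3        # peak single-precision throughput


def full_memory_sweep_ms(memory_gb: float = MEMORY_GB,
                         bandwidth_gb_s: float = BANDWIDTH_GB_S) -> float:
    """Lower bound, in milliseconds, for reading every byte of VRAM once at peak bandwidth."""
    return memory_gb / bandwidth_gb_s * 1000.0


def ridge_point_flops_per_byte(tflops: float = FP32_TFLOPS,
                               bandwidth_gb_s: float = BANDWIDTH_GB_S) -> float:
    """FLOPs per byte at which a kernel stops being memory-bound (simple roofline model)."""
    return (tflops * 1e12) / (bandwidth_gb_s * 1e9)


if __name__ == "__main__":
    print(f"One full 48 GB sweep: {full_memory_sweep_ms():.1f} ms")
    print(f"FP32 ridge point: {ridge_point_flops_per_byte():.1f} FLOPs/byte")
```

In practice, a kernel doing fewer than roughly 24 FP32 operations per byte it reads will be limited by the 672 GB/s bandwidth rather than by raw TFLOPS.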

The RTX 8000 helps teams work faster without worrying about infrastructure constraints. Whether you are rendering, training models, or visualizing data, it delivers consistent performance and reliability.

With large memory capacity and secure access controls, teams can handle big files and collaborate freely across departments.

Secure storage, role-based access, and monitoring features help keep data safe while the business continues to run efficiently.

Companies choose Inhosted.ai because it delivers high-quality RTX 8000 performance with transparent cloud server pricing, combining affordability with enterprise-grade stability. It is designed for businesses that want performance without complexity.

The RTX 8000 handles ray tracing, AI-enhanced rendering, and complex scenes with ease, helping teams produce better images faster.

The RTX 8000 supports demanding workloads built on CUDA, TensorRT, and other NVIDIA technologies, whether for AI training, video rendering, or both.

Your data is protected by secure access controls, encrypted storage, and isolated environments.

Resources can be scaled up or down easily to match your team's needs, helping everyone perform and collaborate better.

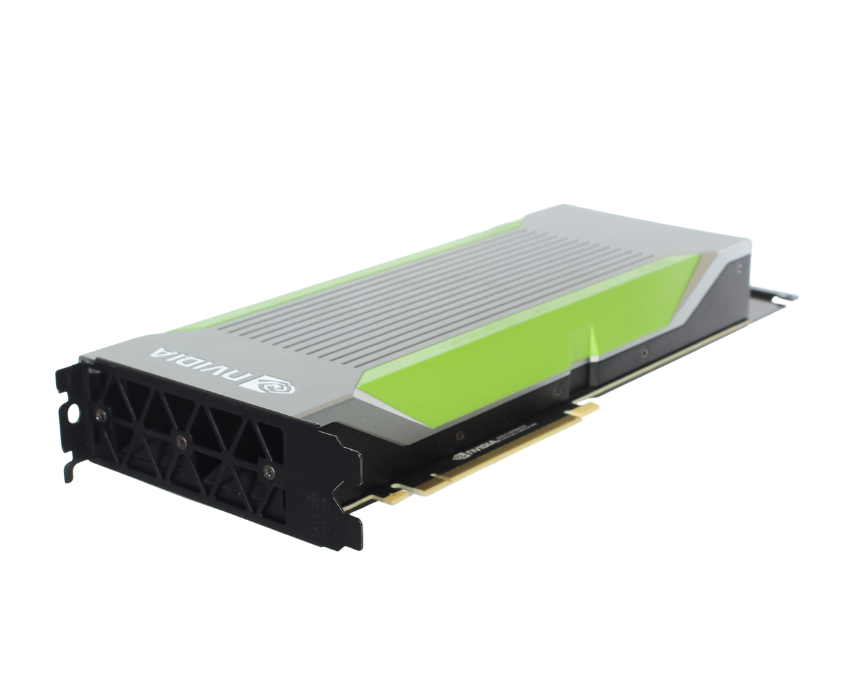

The NVIDIA Quadro RTX 8000 offers 48 GB of high-speed memory along with RT Cores and Tensor Cores. In professional use, it provides greater stability, accuracy, and reliability than typical NVIDIA GeForce RTX cards.

It is also a good alternative to NVIDIA's server GPU products, particularly when a team needs consistent performance on long-running tasks.

No middlemen. No shared footprints. End-to-end control of power, cooling, networking and security—so your AI workloads run faster, safer, and more predictably.

The NVIDIA RTX 8000 delivers feature-film-quality ray tracing and AI-enhanced graphics at scale. Teams get faster time-to-final, smoother collaboration, and consistent throughput for demanding render and review cycles, all with cloud elasticity.

Faster ray-traced rendering vs prior gen in many DCC pipelines

Higher AI denoise/super-resolution throughput with Tensor Cores

Large-scene memory (up to 96 GB via NVLink)

Uptime on Inhosted.ai GPU cloud

Where the NVIDIA RTX 8000 turns creative and engineering workloads into breakthroughs — from ray-traced film frames to interactive digital twins and enterprise visualization.

Produce large, high-quality images, animations, and simulations without performance drops. The RTX 8000 is designed to handle complex scenes, which makes it a strong fit for design, VFX, and visualization workflows.

Work in CAD, BIM, and 3D design software in real time. Teams can review, edit, and collaborate faster, without delays or interruptions.

Build accurate digital twins for architecture, manufacturing, and smart infrastructure. The RTX 8000 provides the performance needed for high-quality simulation of real-world behavior.

Create high-quality virtual and augmented reality experiences with stable frame rates and rich visuals for training, design reviews, and interactive spaces.

Create, refine, and edit high-resolution video with ease while also running AI workloads. From upscaling to analytics, performance stays consistent as you scale up.

Train computer vision models with high accuracy. The RTX 8000 handles detection, tracking, and analysis effectively, making it a cost-effective choice among GPU cloud providers without sacrificing performance.

At inhosted.ai, we empower AI-driven businesses with enterprise-grade GPU infrastructure. From GenAI startups to Fortune 500 labs, our customers rely on us for consistent performance, scalability, and round-the-clock reliability. Here's what they say about working with us.

Join Our GPU Cloud

“Switching to inhosted.ai’s RTX 8000 nodes cut our shot turnarounds by more than half. AI denoising runs in-line, and frame times are finally predictable across shows.”

“The Ada generation made a visible difference in our AI content production. We’re generating text-to-video and visual assets faster than ever before with stable latency.”

“RTX 8000 is the right fit for our CAD/BIM team. Real-time ray tracing plus NVLink for large assemblies makes design reviews feel instant, and everyone can collaborate without stalls.”

“Our video AI pipeline uses super-resolution and denoise passes before delivery. On RTX 8000 we batch multiple streams reliably and hit deadlines without spinning extra capacity.”

“Security was our top requirement. Inhosted.ai’s isolation and audit controls let us host pre-release assets safely while still scaling renders during crunch.”

“The pricing is transparent and the uptime solid. We spin up RTX 8000s for crunch weeks, keep a small baseline otherwise, and the billing maps exactly to usage.”

Yes, the RTX 8000 is a real, enterprise-grade graphics card made by NVIDIA. It is designed for demanding professional and enterprise workloads such as AI development, 3D rendering, simulation, and advanced visualization.

The price of the RTX 8000 in India depends on factors such as availability, supplier, system configuration, and deployment model. Many companies prefer to access the RTX 8000 through a cloud platform or data center rather than buying the hardware outright, because it is more flexible and costs less to get started.

NVIDIA released the Quadro RTX 8000 in 2018 as part of its professional Quadro RTX series, based on the Turing architecture. It brought accelerated ray tracing and AI to business workstations.

The RTX 8000 comes with 48 GB of GDDR6 VRAM, and two cards can be connected with NVLink for a combined 96 GB of memory in applications that support it. This makes it suitable for large AI models, complex simulations, and high-resolution rendering.
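As a rough illustration of what 48 GB versus 96 GB means for AI models, the sketch below estimates whether a model's weights fit on one RTX 8000 or need a 2-way NVLink pair. It is a simplification under stated assumptions: it counts weights only, applies a hypothetical 20% headroom factor for activations and runtime buffers, and assumes the framework can split the model across both linked cards. Training needs considerably more memory (gradients, optimizer state), so treat the result as a lower bound. The function names are illustrative, not part of any NVIDIA or Inhosted.ai API.

```python
from typing import Optional

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

SINGLE_CARD_GB = 48   # one RTX 8000
NVLINK_PAIR_GB = 96   # two RTX 8000 cards bridged with NVLink (assumes the workload is split across both)


def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def cards_needed(num_params: float, dtype: str = "fp16",
                 overhead: float = 1.2) -> Optional[int]:
    """Return 1 or 2 RTX 8000 cards, or None if even an NVLink pair is too small.

    `overhead` is a hypothetical headroom multiplier for activations and buffers.
    """
    required = weights_gb(num_params, dtype) * overhead
    if required <= SINGLE_CARD_GB:
        return 1
    if required <= NVLINK_PAIR_GB:
        return 2
    return None


if __name__ == "__main__":
    for params in (13e9, 30e9, 70e9):
        print(f"{params / 1e9:.0f}B params, fp16: {cards_needed(params)}")
```

For example, a 13-billion-parameter model in FP16 needs about 26 GB for weights and fits on a single card, while a 30-billion-parameter model (about 60 GB) would need the NVLink pair under these assumptions.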

For AI and professional work, NVIDIA RTX GPUs are a better choice than GTX GPUs. RTX cards include dedicated RT Cores and Tensor Cores, which make them better suited to AI workloads, rendering, and professional applications. GTX cards are designed mainly for general graphics and gaming.

NVIDIA GeForce RTX is a consumer GPU series aimed at gaming, content creation, and entry-level creative tasks. Although it can handle large workloads, it is not built for enterprise scale, whereas the RTX 8000 is designed for reliability and sustained performance.

Yes, the RTX 6000 is also part of NVIDIA's professional GPU line. It performs well on creative and engineering work, while the RTX 8000 offers twice the memory (48 GB versus 24 GB), which favors heavy enterprise and AI applications.