Train GenAI Faster

Optimize LLMs, diffusion, and creative models.

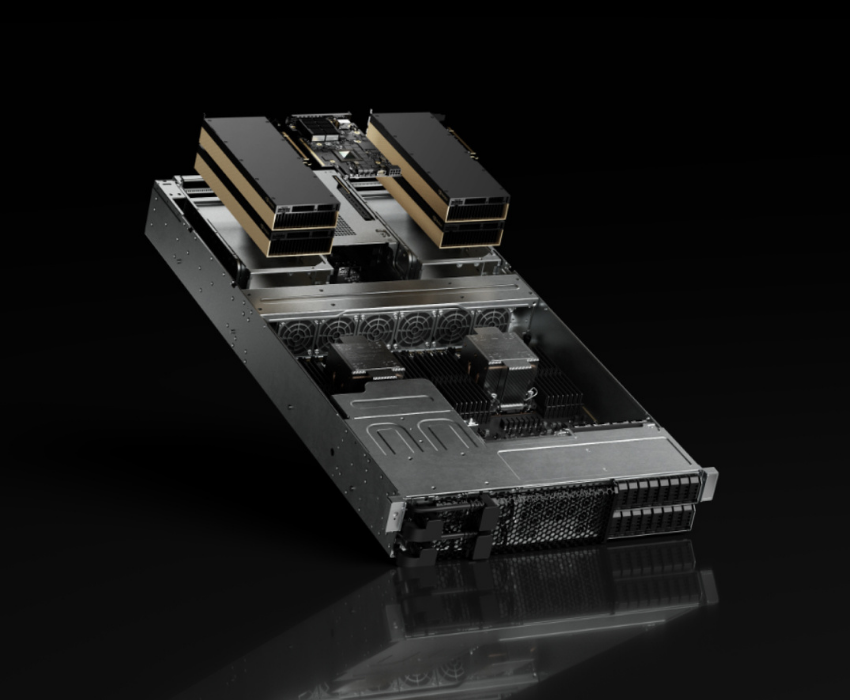

NVIDIA L40S GPUs deliver an exceptional balance of AI compute, graphics rendering, and inference acceleration—making them a powerful choice for GenAI, computer vision, 3D workloads, and enterprise AI deployments. Built on the Ada Lovelace architecture, the L40S combines high-speed tensor performance, advanced ray-tracing cores, and efficient power usage to handle multimodal AI

48 GB GDDR6 with ECC

733 TFLOPS

90 TFLOPS

864 GB/s

350 W

Performance, agility and predictable scale — without the DevOps drag.

Optimize LLMs, diffusion, and creative models.

Accelerate rendering and simulation.

Fully supports Omniverse, TensorRT, and CUDA 12.

From creative workloads to enterprise-scale AI inference, businesses trust Inhosted.ai to deliver the performance and scalability of NVIDIA L40S GPUs — optimized for accelerated compute, advanced visualization, and cloud-native deployment.

Run demanding AI inference, 3D graphics, and generative workloads with lightning speed. The L40S GPU combines Tensor, RT, and CUDA cores to deliver breakthrough acceleration for training, rendering, and real-time AI applications.

Fine-tune diffusion, text-to-image, and video generation models with precision. The L40S is purpose-built for creative AI pipelines, offering superior FP8 Tensor Core throughput and efficient scaling for RAG workflows.

Every L40S deployment on Inhosted.ai runs in ISO 27001, SOC, and PCI DSS–compliant environments. Our infrastructure ensures data isolation, encryption, and secure orchestration, providing peace of mind for visualization workloads.

Deploy your L40S-powered clusters across multiple low-latency regions with automated scaling and redundancy. Our cloud platform delivers 99.95% uptime, ensuring consistent performance for enterprise visualization from anywhere.

Run next-generation AI, rendering, and visualization workloads with NVIDIA L40S GPUs — delivering breakthrough performance with 4th-Gen Tensor Cores, RT Cores, and 48 GB GDDR6 memory. Designed for data centers, creative studios, and enterprise AI platforms, the L40S combines accelerated compute, real-time graphics, and AI inferencing into one versatile powerhouse.

No middlemen. No shared footprints. End-to-end control of power, cooling, networking and security—so your AI workloads run faster, safer, and more predictably.

The NVIDIA H100 sets new performance benchmarks in deep learning, accelerating training and inference for today’s most demanding AI and HPC workloads. Experience next-level scalability, power efficiency, and intelligent throughput with Transformer Engine innovation.

AI Creation Stack

Better inference for GenAI and diffusion

Memory for massive datasets

Uptime on Inhosted.ai GPU cloud

Where the NVIDIA L40S transforms workloads into breakthroughs — from generative AI to 3D rendering, accelerating performance across AI, visualization, and compute-driven industries.

L40S GPUs deliver exceptional performance for AI and deep learning model training, offering efficient mixed-precision compute for a range of frameworks. They handle large datasets, enabling faster iteration and more accurate results — ideal for developing multimodal, generative, and vision-based models with optimized resource utilization.

Accelerate data visualization and AI-driven analytics with low latency and high throughput. The L40S enables organizations to process, analyze, and visualize massive datasets in real time, powering dashboards, predictive systems, and AI-powered business intelligence for faster, smarter decision-making.

Designed for simulation, engineering, and scientific workloads, the L40S combines CUDA, RT, and Tensor Cores for compute-intensive applications. Its scalable parallelism supports advanced simulations, 3D design, and rendering workflows with excellent energy efficiency and consistent performance.

The L40S accelerates NLP workloads including language modeling, summarization, and RAG-based pipelines. With advanced Tensor Cores and optimized inference performance, it enables real-time interaction for chatbots, multilingual translation, and voice AI—all with reduced latency and lower operational cost.

From image synthesis and video rendering to AI-driven visual content creation, the L40S delivers cutting-edge GPU acceleration. Its advanced Tensor and RT Cores support diffusion models, visual effects, and content pipelines, making it perfect for enterprises building next-gen creative and generative AI tools.

Power recommendation engines and personalization platforms with precision. The L40S optimizes large-scale embeddings and vector search for content ranking, product recommendations, and targeted advertising, delivering real-time user personalization while maintaining performance stability across workloads.

At inhosted.ai, we empower AI-driven businesses with enterprise-grade GPU infrastructure. From GenAI startups to Fortune 500 labs, our customers rely on us for consistent performance, scalability, and round-the-clock reliability. Here's what they say about working with us.


“Our transition to inhosted.ai’s L40S GPUs was game-changing for our rendering studio. Scenes that used to take hours now render in minutes — without sacrificing quality. The transition was seamless, and their team optimized our cluster to balance creative and AI workloads effortlessly.”

“We manage large-scale video intelligence pipelines, and the L40S’s speed is unreal. Processing latency dropped by 40%, and our batch jobs now finish overnight instead of over the weekend. The cluster orchestration is seamless.”

“The new L40S GPUs are simply faster, cooler, and smarter. Power efficiency is outstanding — we’re getting higher performance per watt than any setup we’ve used before. And with inhosted.ai’s uptime, it feels like a local supercomputer.”

“We’re in biotech simulation — high-performance computing is our lifeline. The L40S nodes cut simulation runtimes by nearly 60%. Even during heavy parallel workloads, stability and bandwidth remained flawless.”

“Our NLP division trains multi-lingual LLMs, and the L40S GPUs handled massive datasets effortlessly. The scaling flexibility, combined with predictable billing, made it easy for us to ramp up without worrying about runaway costs.”

“We’ve used multiple cloud GPU providers — none come close to the performance consistency we get with inhosted.ai’s L40S instances. Our recommendation models hit record inference speeds, and customer response times improved instantly.”

The NVIDIA L40S GPU is a next-generation data-center GPU built on Ada Lovelace architecture, designed to unify AI acceleration, rendering, and graphics workloads. Unlike traditional GPUs that focus on either compute or visualization, the L40S bridges both worlds — combining Tensor Cores for AI, RT Cores for real-time ray tracing, and 48 GB GDDR6 memory for massive data throughput. This makes it ideal for generative AI, 3D design, visualization, and content creation under a single high-efficiency platform.

L40S GPUs excel in AI inference, computer vision, digital twin simulation, and graphics rendering. Businesses building generative AI applications, chatbots, or media-rich AI tools gain faster performance with reduced latency. Creative industries such as architecture, gaming, product design, and film VFX can leverage the GPU’s real-time ray tracing and encoding capabilities for faster visualization and rendering — all while maintaining top-tier energy efficiency and stability.

While the H100 GPU dominates large-scale AI training and HPC, the L40S offers a balanced performance profile optimized for AI inference, rendering, and visualization. It delivers up to 1.2× higher AI inference performance compared to the previous A40, while consuming less power and offering broader workload flexibility. For businesses that need AI plus graphics acceleration without the extreme cost of HPC-class GPUs, L40S provides the sweet spot of speed, scalability, and cost efficiency.

Absolutely. The L40S GPU supports Tensor Cores that accelerate FP8/FP16 matrix operations critical for generative AI, LLMs, and diffusion models. Many enterprises use L40S clusters for fine-tuning LLMs, running inference pipelines, or deploying custom AI assistants at scale. The L40S does not include NVLink; GPUs exchange data over PCIe Gen4, which sustains consistent throughput for AI workloads that demand real-time responsiveness.
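As a rough illustration of why FP8/FP16 precision matters on a 48 GB card, the sketch below estimates how much memory a model's weights alone would occupy at each precision. The 13-billion-parameter model size is a hypothetical example, not a figure from this page, and the estimate deliberately ignores activations, KV cache, and optimizer state, so real headroom will be smaller.

```python
# Approximate bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}

# Memory capacity quoted on this page for the L40S.
L40S_MEMORY_GB = 48


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def weights_fit_on_one_gpu(num_params: float, precision: str,
                           memory_gb: float = L40S_MEMORY_GB) -> bool:
    """True if the weights alone fit in GPU memory (no runtime overhead counted)."""
    return weight_footprint_gb(num_params, precision) <= memory_gb


if __name__ == "__main__":
    hypothetical_params = 13e9  # assumed model size, for illustration only
    for precision in ("fp32", "fp16", "fp8"):
        size = weight_footprint_gb(hypothetical_params, precision)
        fits = weights_fit_on_one_gpu(hypothetical_params, precision)
        print(f"{precision}: {size:.1f} GB of weights, fits in 48 GB: {fits}")
```

Under these assumptions, the hypothetical model's weights exceed 48 GB at FP32 but fit at FP16 and FP8, which is why lower precision is often the deciding factor for single-GPU deployment.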

The L40S GPU is built for scalability. It supports multi-GPU configurations over PCIe Gen4 (the L40S does not include NVLink), enabling data flow between GPUs for large-scale AI or render tasks. When deployed through inhosted.ai infrastructure, scaling from 4 to 32 GPUs can be done dynamically without downtime. This makes it a great choice for enterprises that require elastic AI clusters or hybrid deployments where both cloud and on-prem resources need to work together smoothly.

The L40S GPU empowers multiple industries — from media and entertainment, where real-time rendering and video AI are crucial, to automotive, where simulation and design visualization demand precision. Manufacturing firms use L40S for digital twins and predictive maintenance AI, while healthcare and research organizations leverage it for imaging and machine-learning-based data analysis. Its versatility bridges the gap between creative and compute-intensive industries seeking cost-effective GPU acceleration.

inhosted.ai provides enterprise-grade L40S GPU clusters backed by ISO 27001 and SOC-certified data centers. Each node is optimized for AI, render, and simulation workloads with dedicated network bandwidth, a 99.95% uptime SLA, and real-time GPU monitoring dashboards. Businesses gain the flexibility to scale GPU resources on demand while maintaining data isolation, encryption, and regulatory compliance, which makes the platform well suited to secure enterprise AI operations.
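To make the 99.95% uptime figure concrete, the short sketch below converts an uptime percentage into the downtime it permits over a given period. The function name and the 30-day and 365-day periods are illustrative assumptions; how the SLA actually measures and credits downtime is not described on this page.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float) -> float:
    """Return the downtime budget, in minutes, implied by an uptime percentage."""
    downtime_fraction = 1 - uptime_percent / 100
    return downtime_fraction * period_days * 24 * 60


if __name__ == "__main__":
    sla = 99.95  # uptime figure quoted on this page
    per_month = allowed_downtime_minutes(sla, 30)   # assumed 30-day month
    per_year = allowed_downtime_minutes(sla, 365)   # assumed 365-day year
    print(f"Per 30-day month: {per_month:.1f} minutes")
    print(f"Per 365-day year: {per_year / 60:.2f} hours")
```

Under these assumptions, 99.95% uptime corresponds to roughly 21.6 minutes of permitted downtime in a 30-day month, or about 4.4 hours over a year.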