As data volumes grow and AI models become more complex, many teams find that conventional infrastructure can no longer keep up. Model training takes too long, analytics pipelines are sluggish, and infrastructure costs are hard to predict. This is where the choice of cloud server architecture becomes important.

For startups, enterprises, and AI-driven teams alike, GPU-powered infrastructure is no longer optional. But is GPU computing the right choice for big data analytics? And how do you choose between a cloud GPU, a dedicated GPU server, and a traditional server?

What Is a Cloud Server?

A cloud server is a virtual computing environment that runs on high-performance physical hardware in a data center. Unlike an on-premise server, a cloud server lets you scale resources (CPU, memory, storage, and GPU) on demand.

Teams do not need to spend heavily on hardware upfront: they can spin up infrastructure within minutes and pay only for what they use. This flexibility makes cloud servers well suited to fast-moving workloads such as AI training, analytics, and big data processing.

Server virtualization is central to modern cloud computing. It lets many virtual machines run on the same physical hardware, which lowers costs and improves efficiency.

Where Cloud GPU Fits Into Big Data Workloads

A cloud GPU adds dedicated parallel processing to a cloud server. GPUs are built to run thousands of operations in parallel, which makes them well suited to:

- Big data analytics

- Machine learning model training

- AI inference at scale

- Image, video, and language processing

GPUs handle large volumes of data more efficiently than CPUs when the work can be parallelized. This is why many modern AI platforms rely on NVIDIA GPU architectures for performance-critical workloads.

For example, a recommendation model that takes hours to run on CPUs may take only minutes on GPUs. This performance gap directly affects cost efficiency and productivity.
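The link between runtime and cost can be made concrete with a small calculation. The sketch below is illustrative only: the runtimes and hourly rates are hypothetical placeholders, not benchmarks or real prices, and `job_cost` is a helper name introduced here for the example.

```python
def job_cost(runtime_hours: float, hourly_rate: float) -> float:
    """Cost of one run: runtime multiplied by the instance's hourly price."""
    return runtime_hours * hourly_rate


# Hypothetical figures for illustration only (not measured, not real prices).
cpu_hours, cpu_rate = 6.0, 0.50   # a slower, cheaper CPU instance
gpu_hours, gpu_rate = 0.25, 3.00  # a faster, pricier GPU instance

cpu_cost = job_cost(cpu_hours, cpu_rate)
gpu_cost = job_cost(gpu_hours, gpu_rate)
speedup = cpu_hours / gpu_hours

print(f"CPU run: {cpu_cost:.2f}, GPU run: {gpu_cost:.2f}, speedup: {speedup:.0f}x")
```

With these assumed numbers the GPU instance costs more per hour but less per run, because the job finishes much sooner. Whether that holds for a real workload depends on the actual speedup and the actual prices.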

Why Server Virtualization Matters in Cloud Computing

Server virtualization in cloud computing lets organizations isolate workloads while sharing the underlying hardware efficiently. This approach has several benefits:

- Isolation: Workloads are separated from one another, which improves security.

- Elasticity: Resources can be scaled up or down almost instantly.

- Better utilization: No hardware sits idle.

- Rapid deployment: New environments are ready within minutes.

Combined with GPUs, virtualization lets teams run multiple AI workloads at the same time and avoid bottlenecks.

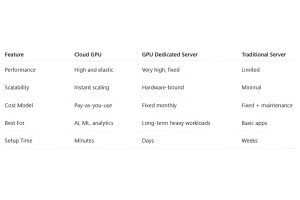

Cloud GPU vs GPU Dedicated Server vs Traditional Server

A GPU-dedicated server works well when workloads are constant and predictable. However, for teams that need flexibility, fast provisioning, and cost control, a cloud GPU offers a better balance.
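The trade-off between a fixed-cost dedicated server and pay-per-use cloud capacity can be sketched as a break-even calculation. This is a simplified model under stated assumptions: the dedicated server is billed as a flat monthly fee, the cloud GPU is billed per hour of use, and all prices below are hypothetical. The function names are introduced here for illustration.

```python
def break_even_hours(dedicated_monthly_cost: float, cloud_hourly_rate: float) -> float:
    """Monthly GPU hours at which pay-per-use cost equals the flat monthly fee."""
    if cloud_hourly_rate <= 0:
        raise ValueError("cloud_hourly_rate must be positive")
    return dedicated_monthly_cost / cloud_hourly_rate


def cheaper_option(hours_per_month: float,
                   dedicated_monthly_cost: float,
                   cloud_hourly_rate: float) -> str:
    """Return which option costs less for the given monthly usage."""
    cloud_cost = hours_per_month * cloud_hourly_rate
    return "cloud GPU" if cloud_cost < dedicated_monthly_cost else "dedicated server"


# Hypothetical prices: flat fee of 1500 per month vs 3.00 per GPU hour.
print(break_even_hours(1500.0, 3.0))     # hours per month where both cost the same
print(cheaper_option(120, 1500.0, 3.0))  # light, bursty usage
print(cheaper_option(650, 1500.0, 3.0))  # heavy, near-constant usage
```

Under these assumptions, usage well below the break-even point favors the cloud GPU, while sustained usage above it favors the dedicated server. The model ignores factors such as provisioning time, storage, and data transfer.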

The Role of NVIDIA GPUs in Modern Cloud Computing

Most high-performance cloud environments use NVIDIA GPU technology because of its mature ecosystem and broad software compatibility. Frameworks such as TensorFlow and PyTorch run on NVIDIA hardware through the CUDA platform, which makes it easier to develop and scale AI applications.

For companies that handle very large data volumes, the choice of GPU type directly affects training speed, inference performance, and operating cost.

How to Choose the Right Setup for Your Workload

Consider the following before choosing a cloud platform:

• Type of workload: AI training, inference, analytics, or mixed workloads.

• Performance requirements: GPU memory, compute cores, and networking.

• Scalability: The ability to scale up and down quickly.

• Cost transparency: Clear pricing without hidden charges.

• Reliability: Uptime and infrastructure stability backed by an SLA.

• Support: Technical support on demand.
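For the GPU memory criterion, a rough first check is whether a model's weights fit on the card at all. The sketch below assumes a simple rule: parameter count multiplied by bytes per parameter. The `headroom` factor of 0.8 is an arbitrary assumption for illustration, and the helper names are hypothetical. Treat the result as a lower bound, since training also needs memory for gradients, optimizer state, and activations.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: int, dtype: str = "fp16") -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def weights_fit(num_params: int, gpu_memory_gb: float,
                dtype: str = "fp16", headroom: float = 0.8) -> bool:
    """True if the weights fit within an assumed usable share of GPU memory."""
    return weights_gb(num_params, dtype) <= gpu_memory_gb * headroom


# Example: a 7-billion-parameter model on hypothetical 24 GB and 16 GB GPUs.
print(weights_gb(7_000_000_000, "fp16"))
print(weights_fit(7_000_000_000, gpu_memory_gb=24, dtype="fp16"))
print(weights_fit(7_000_000_000, gpu_memory_gb=16, dtype="fp32"))
```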

Choosing the right cloud server, GPU type, and pricing model helps ensure long-term efficiency.

Why Teams Choose inhosted.ai

inhosted.ai is designed to deliver high-performance, reliable, and uncomplicated GPU infrastructure. It offers:

• GPU-powered cloud servers that can be provisioned quickly

• Transparent pricing in INR

• High availability with defined uptime guarantees

• Support for AI training, inference, and data analytics workloads

• Flexible scaling without long-term lock-in

Whether you are running experiments or deploying production workloads, inhosted.ai helps you move faster without sacrificing stability.

Final Thoughts

So, can GPU computing be used for big data analytics? Yes, when it is done right.

A well-architected, GPU-accelerated cloud server delivers insights faster, scales more easily, and reduces operational friction. With the right infrastructure, clear cost control, and flexibility, teams can unlock the full potential of data-driven innovation.

If you are weighing your next infrastructure decision, now is the time to evaluate cloud GPUs and decide how they fit into your analytics strategy.

Ready to get started? Spin up your GPU server in a few minutes and build with confidence on inhosted.ai.

FAQs:

1. What is a cloud GPU, and how does it help with analytics?

A cloud GPU is a graphics processor attached to a cloud server, which lets a team speed up data processing and analytics on very large datasets. It provides the parallel processing power needed for demanding tasks such as AI inference or large-scale model training, without requiring an upfront hardware purchase.

2. Does GPU computing make big data analytics faster?

Yes. GPUs are designed to perform a large number of computations simultaneously, so analytics on large datasets can run much faster than on CPU-only systems. GPUs can significantly cut processing time for workloads that rely on parallel computation, such as deep learning inference or real-time analytics.

3. Should I use a standard cloud server or a GPU cloud server for data analytics?

If your analytics workflows involve AI, machine learning, or GPU-optimized libraries, a GPU cloud server is usually the better choice because it combines high performance with scalability. A standard cloud server is sufficient for lighter analytics or traditional ETL jobs. The key is to match the infrastructure to the complexity of your workload.

4. What is the difference between a cloud GPU and a dedicated GPU server?

A cloud GPU lets you spin GPU capacity up when you need it and down when you do not, which can be more cost-effective for variable workloads. A dedicated GPU server gives you consistent hardware, which can suit long-term, high-usage projects. The best option depends on your workload patterns and budget.

5. Can NVIDIA GPUs in the cloud be used for big data analytics?

Absolutely. NVIDIA GPUs are popular on cloud platforms because they work with a wide range of analytics and AI frameworks. They speed up big data processing, model training, and inference because they handle parallel workloads more effectively than CPUs alone.